Mingcong Liu

Y-tech, Kuaishou Technology, Beijing, China

I am an algorithm engineer at Y-tech, Kwai (Kuaishou), working on computer vision, machine learning, and computational photography. My research interests include generative modeling, image enhancement, and unsupervised learning.

I received my B.S. and M.S. degrees in Optical Engineering, both from Beijing Institute of Technology. During my Master’s program, I studied the enhancement and visualization of thermal images under the supervision of Prof. Weiqi Jin.

News

| Date | News |
|---|---|
| Dec 3, 2021 | The AAHQ dataset has been released! |
| Oct 25, 2021 | Our BlendGAN paper has been accepted to NeurIPS’21. |

Selected Publications

-

NeurIPS


BlendGAN: Implicitly GAN Blending for Arbitrary Stylized Face Generation

Advances in Neural Information Processing Systems (NeurIPS), 2021

Generative Adversarial Networks (GANs) have made a dramatic leap in high-fidelity image synthesis and stylized face generation. Recently, a layer-swapping mechanism has been developed to improve the stylization performance. However, this method is incapable of fitting arbitrary styles in a single model and requires hundreds of style-consistent training images for each style. To address the above issues, we propose BlendGAN for arbitrary stylized face generation by leveraging a flexible blending strategy and a generic artistic dataset. Specifically, we first train a self-supervised style encoder on the generic artistic dataset to extract the representations of arbitrary styles. In addition, a weighted blending module (WBM) is proposed to blend face and style representations implicitly and control the arbitrary stylization effect. By doing so, BlendGAN can gracefully fit arbitrary styles in a unified model while avoiding case-by-case preparation of style-consistent training images. To this end, we also present a novel large-scale artistic face dataset AAHQ. Extensive experiments demonstrate that BlendGAN outperforms state-of-the-art methods in terms of visual quality and style diversity for both latent-guided and reference-guided stylized face synthesis.
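To make the idea of implicitly blending face and style representations concrete, here is a minimal sketch of a per-layer weighted blend of two latent codes. It assumes both codes are already split into one vector per generator layer and that one blending weight in [0, 1] per layer is given; the function name `blend_latents`, the fixed weights, and the convex-combination form are illustrative assumptions, not the actual WBM, whose weights are part of the trained model.

```python
from typing import List

Vector = List[float]


def blend_latents(face: List[Vector], style: List[Vector], alpha: List[float]) -> List[Vector]:
    """Hypothetical per-layer blend: layer i gets alpha[i] * style[i] + (1 - alpha[i]) * face[i]."""
    if not (len(face) == len(style) == len(alpha)):
        raise ValueError("face, style and alpha must have one entry per layer")
    blended = []
    for f, s, a in zip(face, style, alpha):
        if len(f) != len(s):
            raise ValueError("face and style codes must have the same width")
        if not 0.0 <= a <= 1.0:
            raise ValueError("blending weights must lie in [0, 1]")
        # Convex combination: a = 0 keeps the face code, a = 1 takes the style code.
        blended.append([a * sv + (1.0 - a) * fv for fv, sv in zip(f, s)])
    return blended


# Toy example: 3 layers with 2-dimensional codes; the weight grows from layer to layer.
face = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
style = [[0.0, 2.0], [0.0, 2.0], [0.0, 2.0]]
print(blend_latents(face, style, [0.0, 0.5, 1.0]))  # [[1.0, 1.0], [0.5, 1.5], [0.0, 2.0]]
```

Varying the per-layer weights is what lets a single model trade off how much of the style reaches each layer, rather than swapping whole layers between separately trained generators.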

-

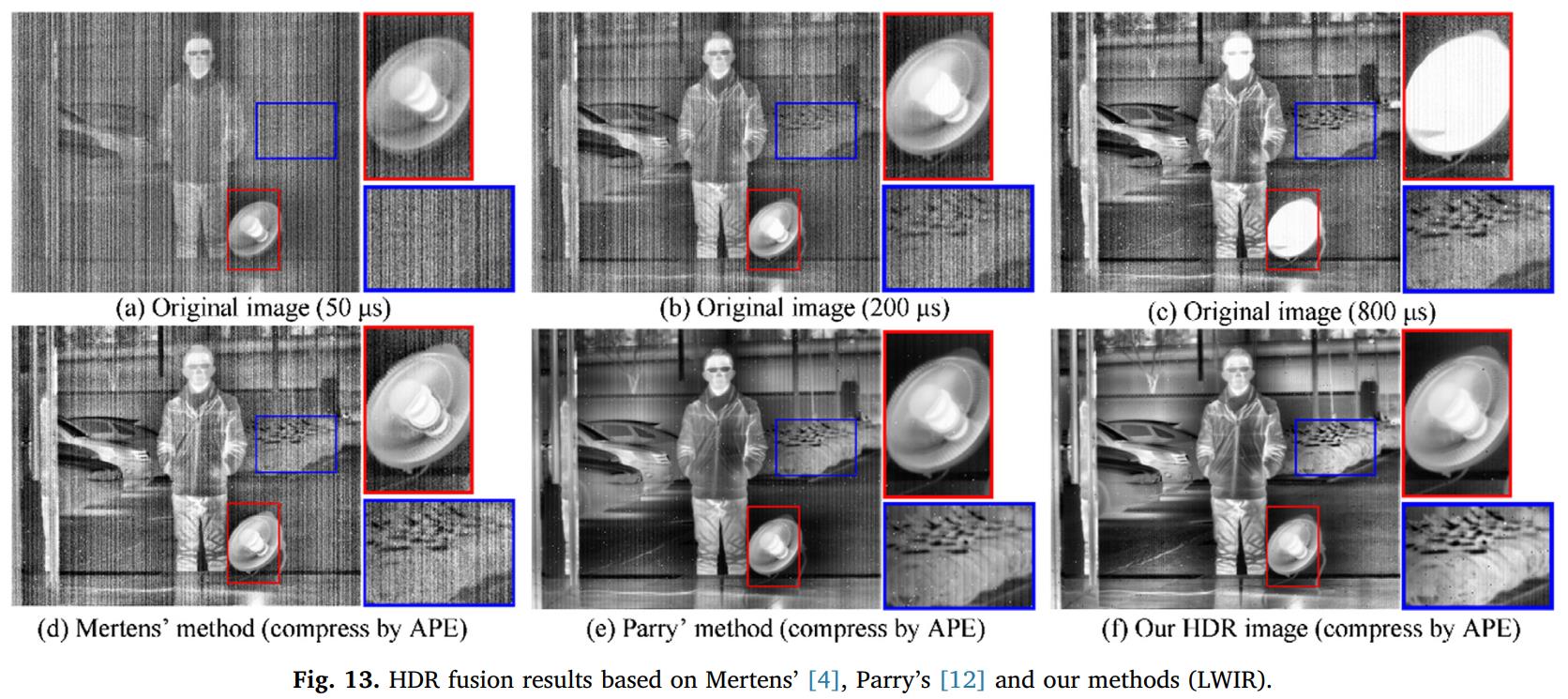

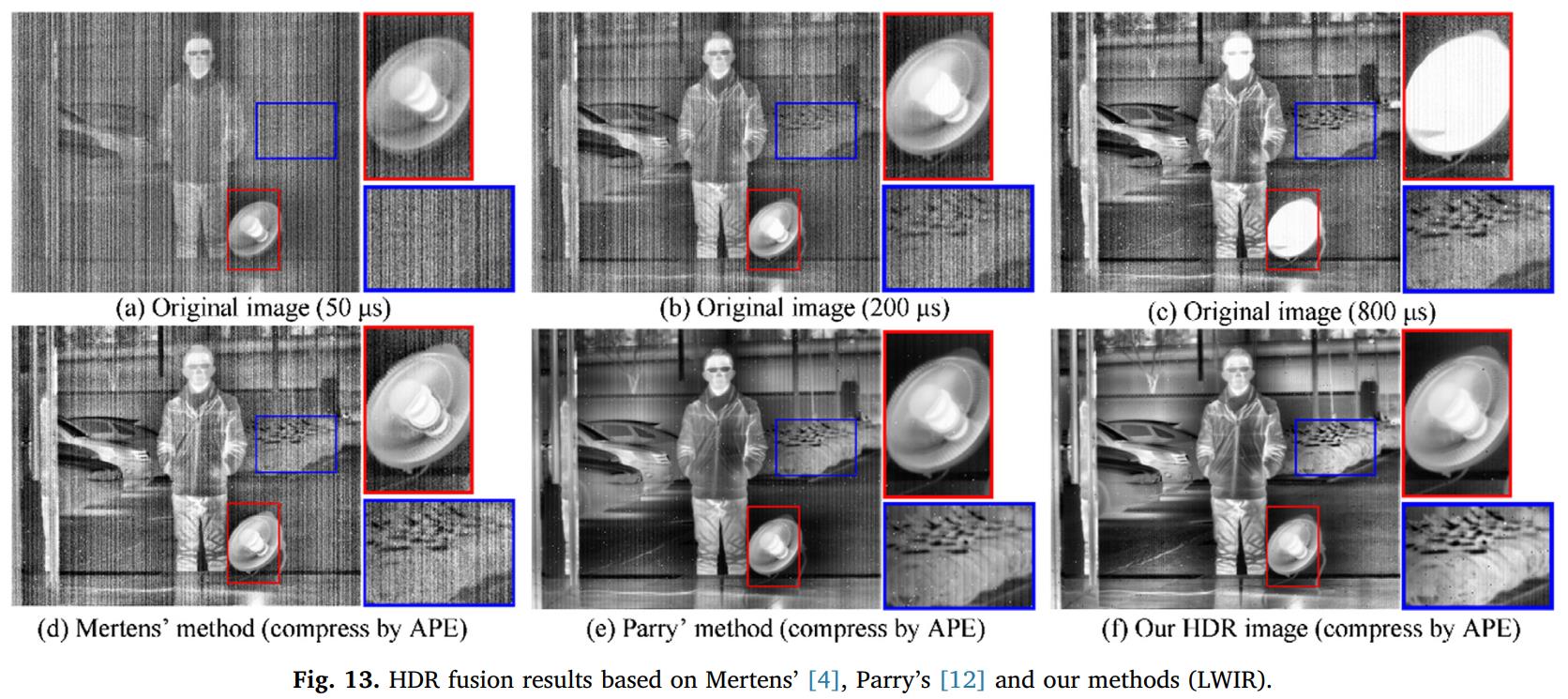

Fusion algorithm based on grayscale-gradient estimation for infrared images with multiple integration times

Shuo Li, Weiqi Jin, Li Li, Mingcong Liu, and Jianguo Yang

Infrared Physics & Technology (IPT), 2020

In high dynamic range (HDR) scenes containing strong local radiation, infrared images acquired with a single integration time cannot preserve the details of both bright and dark regions because of the limited dynamic range of the detector. Fusing multiple infrared images captured with different integration times is an effective way to extend the dynamic range of infrared imaging systems, and the fusion algorithm largely determines the visual quality of the result. In this paper, we propose a fusion algorithm based on grayscale-gradient estimation for infrared images with multiple integration times. Our algorithm first estimates an objective grayscale image and an objective gradient map, then substitutes them into an optimization framework for image fusion, and finally obtains a fused image with appropriate grayscale and gradient distributions by solving a minimization problem. Experiments show that the proposed algorithm works well in both normal and HDR infrared scenarios. Compared with existing typical multi-exposure fusion algorithms, it produces better results in terms of noise suppression, visual information fidelity, and perceptual quality. The proposed algorithm therefore has potential in thermal vision applications involving high dynamic range scenes and offers a useful reference for research on HDR thermal imaging technology.
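The final step, recovering an image from a target grayscale and a target gradient by minimization, can be illustrated with a deliberately simplified sketch: a single 1D scan line and an unweighted least-squares energy, sum (u_i - gray_i)^2 + lam * sum (u_{i+1} - u_i - grad_i)^2. The energy, the weight `lam`, and the function names are assumptions for illustration; the paper's actual terms and weights are not reproduced here, and a 2D image would need a sparse solver instead of the tridiagonal one used below.

```python
from typing import List, Sequence


def solve_tridiagonal(sub: Sequence[float], diag: Sequence[float],
                      sup: Sequence[float], rhs: Sequence[float]) -> List[float]:
    """Thomas algorithm for a tridiagonal system; sub[0] and sup[-1] are ignored."""
    n = len(diag)
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = sup[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - sub[i] * c[i - 1]
        c[i] = sup[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - sub[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def fuse_1d(gray: Sequence[float], grad: Sequence[float], lam: float) -> List[float]:
    """Minimise sum (u_i - gray_i)^2 + lam * sum (u_{i+1} - u_i - grad_i)^2.

    gray: target grayscale (length n); grad: target forward differences (length n - 1).
    """
    n = len(gray)
    if len(grad) != n - 1:
        raise ValueError("grad must have exactly one entry fewer than gray")
    # Normal equations (I + lam * D^T D) u = gray + lam * D^T grad, where D is the
    # forward-difference operator; the system matrix is tridiagonal and diagonally dominant.
    diag = [1.0 + lam * ((i > 0) + (i < n - 1)) for i in range(n)]
    sub = [0.0] + [-lam] * (n - 1)
    sup = [-lam] * (n - 1) + [0.0]
    rhs = [gray[i] + lam * ((grad[i - 1] if i > 0 else 0.0) - (grad[i] if i < n - 1 else 0.0))
           for i in range(n)]
    return solve_tridiagonal(sub, diag, sup, rhs)


# A flat target grayscale combined with a target slope of 1 per pixel.
u = fuse_1d([0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0], lam=100.0)
```

A larger `lam` pulls the differences of `u` toward `grad`, while the mean of `u` always equals the mean of `gray`, because the gradient terms sum to zero in the normal equations. In this toy example, `u` therefore approaches [-1.5, -0.5, 0.5, 1.5] as `lam` grows.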

-

Applied Optics


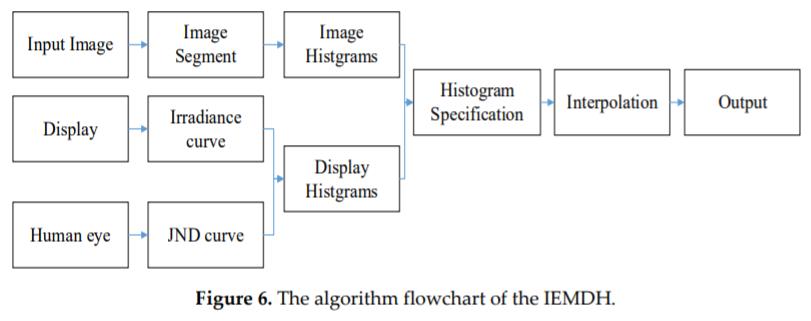

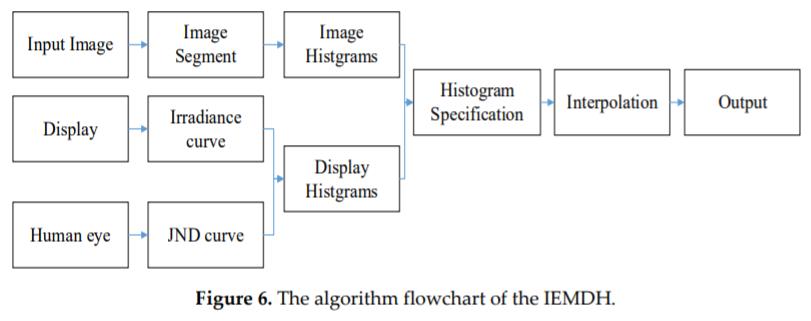

Image contrast enhancement method based on display and human visual system characteristics

Guo Chen, Li Li, Weiqi Jin, Mingcong Liu, and Feng Shi

Applied Optics, 2019

Many image contrast enhancement methods exist, and their main considerations are detail enhancement, noise suppression, and high-contrast suppression. Traditional methods ignore the characteristics of the display or treat the display only as a whole. However, because most display devices on the market have a limited dynamic range, the difference between two adjacent gray levels of the display is often below the just noticeable difference (JND) of the human visual system, which makes many image details invisible on the display. To solve this problem, we present a preprocessing method for image contrast enhancement. The method combines the characteristics of the human eye and the display and enhances the image by examining the local histogram. When the processed image is displayed, the algorithm preserves as much image information as possible, so image details are not lost because of the limits of the display device. Moreover, the algorithm performs well at noise suppression and high-contrast suppression. It works as an image enhancement method in its own right and can also serve as a correction step for images enhanced by other methods before they are displayed.
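The abstract does not spell out the mapping, so the following is only a hypothetical, global (not local) simplification of the idea: build a lookup table that keeps every occupied input gray level at least `min_step` display levels away from its neighbor, and share out the remaining display range in proportion to the histogram, as histogram equalization would. The name `jnd_lut` is hypothetical, and `min_step` stands in for the number of display levels needed for one JND; the values used below are made up.

```python
import math
from typing import List, Sequence


def jnd_lut(hist: Sequence[int], display_levels: int, min_step: int) -> List[int]:
    """Map input gray levels to display levels (hypothetical JND-aware equalization).

    Occupied input levels end up at least min_step display levels apart; the range
    left over is distributed in proportion to the histogram counts.
    """
    occupied = [i for i, count in enumerate(hist) if count > 0]
    lut = [0] * len(hist)
    if len(occupied) < 2:
        return lut  # nothing to separate
    span = display_levels - 1
    gaps = len(occupied) - 1
    extra = span - min_step * gaps
    if extra < 0:
        raise ValueError("too many occupied levels to keep them one JND apart")
    weights = [hist[i] for i in occupied[1:]]  # each gap is weighted by its upper level's count
    total = sum(weights)
    out = {occupied[0]: 0}
    pos = 0.0
    for level, w in zip(occupied[1:], weights):
        pos += min_step + extra * w / total
        # floor(x + 0.5) is monotone, unlike Python's round-half-to-even round().
        out[level] = min(span, math.floor(pos + 0.5))
    # Unoccupied levels inherit the output of the nearest occupied level below them.
    current = 0
    for i in range(len(hist)):
        if i in out:
            current = out[i]
        lut[i] = current
    return lut


print(jnd_lut([100, 0, 1, 1], display_levels=256, min_step=10))  # [0, 0, 128, 255]
print(jnd_lut([5, 1, 0, 1000], display_levels=256, min_step=20))  # [0, 20, 20, 255]
```

In the second call, the sparsely populated input level 1 still lands 20 display levels above level 0 instead of being squeezed next to it, which is the kind of detail loss the abstract describes.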

-

IPT


Infrared HDR image fusion based on response model of cooled IRFPA under variable integration time

Mingcong Liu, Shuo Li, Li Li, Weiqi Jin, and Guo Chen

Infrared Physics & Technology (IPT), 2018

The multi-exposure fusion method is an effective way to extend the dynamic range of an infrared focal plane array (IRFPA), but the traditional method does not take into account the impact of the integration time on each pixel’s response function, which introduces nonuniformity noise and degrades the fusion quality. Starting from the traditional response model of an infrared detector, this article derives the relationship between the response function and the integration time by introducing new influence factors, and conducts verification experiments with mid-wave (MW) and long-wave (LW) thermal cameras. The experimental results are consistent with the proposed model: within the linear response range of the detector and at a fixed ambient temperature, the gain parameters of the pixels are independent of the integration time, and the offset parameters are approximately inversely proportional to it. Based on these results, an infrared HDR image fusion method under variable integration time is then studied. The resulting images retain more detail in both the bright and dark areas of the scene, and the nonuniformity is partly corrected at the same time. This shows that the proposed model is effective for extending the dynamic range of the IRFPA and has theoretical significance and practical value for further HDR thermal imaging research.
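One way to read the stated relations is a per-pixel model for the response normalized by integration time, r = y / t = G·x + B / t, where the gain G does not depend on t and the offset term B / t is inversely proportional to it. The sketch below assumes exactly that model; the paper's model introduces further influence factors that are not reproduced here. The per-pixel calibration constants `gain` and `b`, the linear range, the function names, and the fusion rule (averaging the estimates from unsaturated frames) are all hypothetical choices for illustration.

```python
from typing import Sequence, Tuple


def estimate_signal(raw: float, t: float, gain: float, b: float) -> float:
    """Invert the assumed per-pixel model raw / t = gain * x + b / t for x."""
    return (raw - b) / (gain * t)


def fuse_pixel(samples: Sequence[Tuple[float, float]], gain: float, b: float,
               linear_range: Tuple[float, float]) -> float:
    """Fuse one pixel observed at several integration times.

    samples: (raw value, integration time) pairs; gain and b are this pixel's
    calibration constants; only raw values inside linear_range are trusted.
    """
    lo, hi = linear_range
    estimates = [estimate_signal(y, t, gain, b) for y, t in samples if lo <= y <= hi]
    if estimates:
        return sum(estimates) / len(estimates)
    # No frame is in the linear range: fall back to the one closest to it.
    y, t = min(samples, key=lambda s: max(lo - s[0], s[0] - hi))
    return estimate_signal(y, t, gain, b)


# Toy pixel with gain 2.0, b = 100.0 and true signal 50.0: raw = t * 2.0 * 50.0 + 100.0,
# so t = 1, 2, 4 gives 200, 300 and 500, the last one clipped at a full well of 400.
print(fuse_pixel([(200.0, 1.0), (300.0, 2.0), (400.0, 4.0)], 2.0, 100.0, (10.0, 390.0)))  # 50.0
```

Because `gain` and `b` are calibrated per pixel, the same inversion also compensates each pixel's individual gain and offset, which is one way the fusion could partly correct nonuniformity as the abstract reports.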
